Thank you for letting us know about your interest!

Below, you will find a selection of content curated for you.

From our team of forward-thinking researchers, the latest in academic insights on language and technology: a deep dive into ideas on the brink of change about large language models (LLM), machine translation (MT), text-to-speech, and more.

D-CPT Law: Domain-specific Continual Pre-Training Scaling Law for Large Language Models

To specialize a general-purpose language model on a target domain, extending the model’s pre-training through adaptation on a data mixture having domain-specific data can be a crucial step. For this stage, it is important to determine an optimal mixture ratio (of the adaptation data in the training data) under a fixed compute budget. To avoid incurring expensive searches for the practically optimal data mixture ratio, the authors devise a scaling law to predict the resultant validation loss as a function of the model size, training data size, and the mixture ratio. They also devise a way to extend the scaling law for cross-domain adaptation, which entails adaptation on one domain followed by inferencing on a different domain. They demonstrate the effectiveness of the law in predicting the validation loss trends for a model of a given size.

Read the full paper here

Reversing the Forget-Retain Objectives: An Efficient LLM Unlearning Framework from Logit Difference

The strong knowledge retention capabilities of LLMs are well-known, with their associated privacy and copyright risks. The research field of “model unlearning” works on training a model to “forget” some documents from the training data, while teaching it to “retain” the rest, from which the model is expected to not retain any knowledge from the “forget” part while preserving its utility otherwise. In this work, the authors discuss the problems of degeneration and catastrophic forgetting associated with the previous methods and propose their LLM-based framework to track a target model’s learning and unlearning. They train an assistant LLM on the opposite objective (learning “forget”, forgetting “retain”) which then controls the learning loss of the target LLM to be trained. They show the effectiveness of this method on the aforementioned issues while also demonstrating more stable and efficient training.

Read the full paper here

Convolutional Differentiable Logic Gate Networks

A continuous proliferation of research in deep learning methods has resulted in ever-increasing applications and business opportunities. Among a wave of recent works that have brought forth a dramatic rise in scale, capability, and efficiency of models, few works instead seek to redefine the fundamentals that compose these technologies. In this work, the authors demonstrate a promising paradigm of efficient deep learning methods to reduce computational and energy costs drastically while maintaining performance. Extending their previous work, they formulate CNN-like neural networks solely built using deep logic gate operations such as NAND, OR, and XOR. They tie their evaluation down to hardware efficiency, demonstrating an increase in speed-up to 1900x while maintaining the SoTA performance level on a machine vision problem.

Read the full paper here

RHO-1 – Not All Tokens Are What You Need

Diverging from the standard “causal language modeling” used to pre-train language models in which every token is affected with the same amount of loss, this work instead proposes a “selective language modeling” approach that entails identifying and removing “unimportant” tokens from the loss. The authors, showing the presence of irregularities in token-wise losses on continual pretraining of LLMs, propose this selective strategy for a more effective domain adaptation. Focusing on the mathematics domain, they first train a reference language model on a high-quality corpus. High perplexity tokens as perceived by this reference are then removed from loss calculation while training the target model. In evaluation, they show improvement on seven benchmarks of math reasoning.

Read the full paper here

Would you give us a short feedback about our newsletter?

From top newspapers, research publications, and leading magazines from around the world.

Do AI models produce more original ideas than researchers?

Can AI generate more original ideas than researchers? A recent study by arXiv compared the work of 100 independent scientists with the 4,000 papers produced by Claude 3.5, which were then evaluated by neutral reviewers. The outcome might surprise you!

Read the full article on Nature

We’re Entering Uncharted Territory for Math

In an interview with The Atlantic, renowned mathematician Terence Tao explained that AI won’t replace human mathematicians but will serve as a valuable collaborator. Tao sees AI assisting with preliminary problem-solving, allowing mathematicians to focus on more complex aspects and explore new mathematical frontiers.

Read the full interview on The Atlantic

AI Is Driving India’s Next Agricultural Revolution

India’s agricultural landscape is at a crossroads. Despite being a cornerstone of the economy with 65% of the population involved, inefficiencies and debt plague farmers. The nation is testing transformative tech solutions that could redefine farming across emerging economies. Initiatives like IoT devices promise precision agriculture, but challenges remain in accessibility and implementation. India’s journey could set global standards for innovation in agriculture, balancing technological advances with equitable practices.

Read the full article on IEEE Spectrum

Stories from the Imminent section dedicated to Technology

AI and the limits of language

Authors Jacob Browning and Yann LeCun focus on language models and explain why any technology-based tool trained on words and sentences alone will never reach a human level of understanding.

The Path to LLM-based Machine Translation

Kirti Vashee dives into the topic of the year, Large Language Models, and their application in the world of international commerce and translation. This article provides an in-depth analysis of the opportunities and current challenges this technology faces and a prediction of its emerging use in the near future.

Do you speak mathematics?

What is the thread that connects us with what we do not yet know? Ersilia Vaudo discusses the intrinsic language of the universe: mathematics.

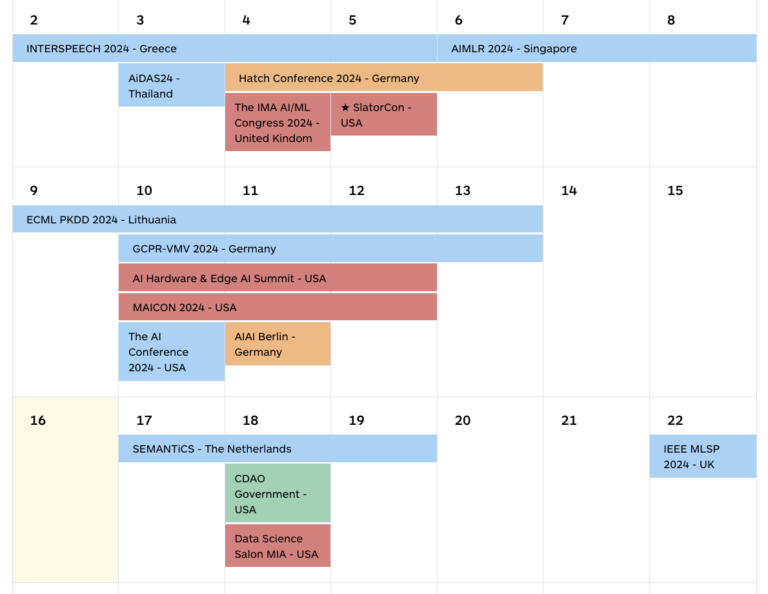

AI and Language Events Calendar

A curated selection of the most important events globally

From groundbreaking research to industry-shifting innovations, find every key AI event gathered in one powerful calendar.

Check it outStories from the Imminent section dedicated to Research

How Language Connects

Martina Ardizzi and Stefana Garello have explored embodied minds when speaking in a multilingual mode and they have seen how brains synchronise while processing the language.

What makes speech sound “human”?

Synthetic speech is everywhere and Siri’s or Alexa’s voices take more and more space in our living rooms. Text-to-speech (TTS) tools aim at creating intelligible and realistic voices. With TTS systems quickly getting better, are we still able to distinguish TTS voices from human ones? If yes, how? A team of researchers from the Max Planck Institute for Empirical Aesthetics combine interdisciplinary expertise to answer these questions with the support of an Imminent grant.

Bilingual brain interhemispheric interactions

Have you ever wondered how people who speak two languages manage to switch between them so easily? Scientists are exploring this fascinating ability, and they’re discovering some interesting things about how our brains handle multiple languages.

Imminent Research Grants

$100,000 to fund language technology innovators

Imminent was founded to help innovators who share the goal of making it easier for everyone living in our multilingual world to understand and be understood by all others. Each year, Imminent allocate $100,000 to fund five original research projects to explore the most advanced frontiers in the world of language services. Topics: Language economics – Linguistic data – Machine learning algorithms for translation – Human-computer interaction – The neuroscience of language.

Apply nowWhile the future may not be evenly distributed, at every moment, somewhere in the world, a window to the future opens. And it speaks in the local language of those who gaze through it, looking ahead. Imminent listens to the experts, interprets the numbers, and develops themes that join the dots, all over the world.