
Originally published in the November issue of the Smart Signals newsletter

On March 13, 2024, the European Parliament formally adopted the EU Artificial Intelligence Act in a landslide: 523 votes in favor, only 46 against. The Act entered into force on August 1, 2024, but August 2025 marked the beginning of its visible effects in the workplace.

This is the world’s first comprehensive horizontal law governing AI — and it’s expected to become the de facto global standard for AI regulation, much like GDPR has for data privacy.

If your company uses AI to hire, track performance, or automate decisions, this law likely applies to you, even if you’re not based in the EU.

Here’s what you need to know.

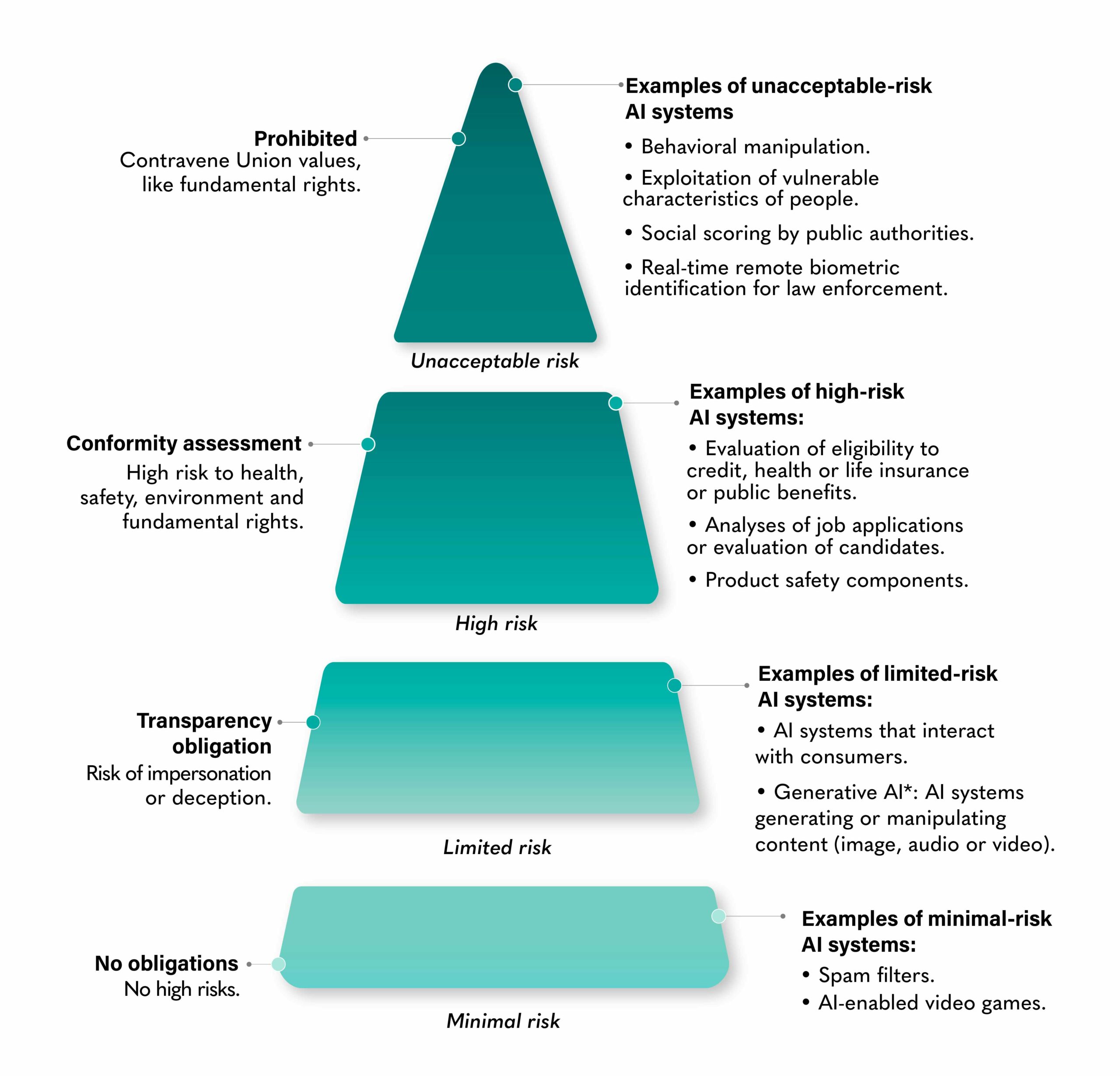

The Foundation: A Risk-Based Approach

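The AI Act doesn’t treat all AI systems equally. Instead, it classifies them into four risk categories, each with different obligations: unacceptable risk (prohibited outright), high risk, limited risk (transparency duties), and minimal risk.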

5 Ways the AI Act Will Directly Impact Your Work Life

1. AI in Hiring Just Got Complicated

If you’re using algorithms to shortlist candidates or score résumés, those tools are now high-risk AI systems. You must:

- Complete fundamental rights impact assessments

- Ensure human oversight in all hiring decisions

- Keep detailed records and logs

- Inform all candidates about AI use

- Monitor for bias continuously

- Be ready to explain decisions

Bottom line: Automated screening isn’t impossible, but it requires significant compliance infrastructure.
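To make the record-keeping and human-oversight points above more concrete, here is a minimal sketch of what one logged screening decision could look like. The `ScreeningRecord` class and all of its field names are hypothetical; the AI Act does not prescribe this schema, and a real system would need legal review.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import Optional
import json


@dataclass
class ScreeningRecord:
    """One AI-assisted screening decision, kept for later audit (hypothetical schema)."""
    candidate_id: str
    model_version: str
    ai_score: float
    ai_recommendation: str               # e.g. "advance" or "reject"
    candidate_informed: bool             # was the candidate told that AI is used?
    reviewer: Optional[str] = None       # human who confirmed or overrode the AI
    final_decision: Optional[str] = None
    explanation: str = ""
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def finalize(self, reviewer: str, decision: str, explanation: str) -> None:
        """Record the human decision and the reason behind it."""
        self.reviewer = reviewer
        self.final_decision = decision
        self.explanation = explanation

    def is_complete(self) -> bool:
        """True only if the candidate was informed and a human signed off with a reason."""
        return bool(
            self.candidate_informed
            and self.reviewer
            and self.final_decision
            and self.explanation
        )

    def to_json(self) -> str:
        """Serialize the record so it can be appended to a log."""
        return json.dumps(asdict(self))


record = ScreeningRecord("c-001", "screener-v1", 0.42, "reject", candidate_informed=True)
record.finalize("hr.reviewer", "advance", "Relevant experience not captured by the score")
print(record.to_json())
```

The design choice here is that a record only counts as complete once a named human has made the final call and written down why, which mirrors the oversight and explainability items in the list.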

2. Performance Monitoring? Watch Your Back (Legally)

Workplace surveillance tech — from productivity trackers to facial recognition — falls under high-risk AI. Emotion recognition in the workplace is prohibited (with narrow medical/safety exceptions).

Task allocation and performance evaluation systems require:

- Documented risk management

- Human oversight mechanisms

- Employee notification

- Regular bias monitoring
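One simple way to picture "regular bias monitoring" is to compare how often a system selects people from different groups. The sketch below is an illustration only: the function names, the sample data, and the 0.8 review threshold are assumptions (the threshold echoes a common rule of thumb, not a figure from the AI Act).

```python
from collections import Counter


def selection_rates(outcomes):
    """Map each group to its share of positive outcomes.

    outcomes: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


def needs_review(rates, threshold=0.8):
    """Flag the system for human review when the gap is large (threshold is an assumption)."""
    return impact_ratio(rates) < threshold


# Hypothetical monitoring sample: group A selected 6 of 10 times, group B 3 of 10.
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(sample)
print(rates, impact_ratio(rates), needs_review(rates))
```

A single ratio like this is a starting signal, not proof of bias or of compliance; it tells you when a human should take a closer look.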

3. Transparency Isn’t Optional Anymore

Employees must be clearly informed when AI is making decisions about them — from promotions to task assignments to performance reviews.

If you’re using AI chatbots for internal support, employees must know they’re not talking to a human.

4. Training Required

Starting February 2025, employers using high-risk AI must train staff on:

- How the AI system works

- How to interpret its outputs

- When and how to intervene

- How to identify potential errors or bias

This isn’t optional guidance — it’s a legal requirement.

5. Unions and Works Councils Get a Voice

Before deploying any high-risk AI tools, companies must consult employee representatives.

This represents a fundamental shift in workplace power dynamics — technology deployment is no longer a unilateral management decision.

Who Does This Apply To?

The AI Act has extraterritorial reach — like GDPR before it:

- Any provider placing AI systems on the EU market, regardless of location

- Any provider of AI systems located outside the EU whose system output is used in the EU

- Any importer or distributor making AI systems available in the EU

- All users of AI systems within the EU

What this means: if your AI system’s outputs are used in the EU, you’re covered by the AI Act — no matter where your company is based.
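As a rough first-pass screen, the four bullets above can be read as a simple "any of these is true" test. The class and field names below are hypothetical, and the result is only a prompt to seek legal advice, not a determination of scope.

```python
from dataclasses import dataclass


@dataclass
class AIActivity:
    """Hypothetical self-assessment flags, one per bullet in the list above."""
    places_system_on_eu_market: bool = False
    output_used_in_eu: bool = False
    imports_or_distributes_in_eu: bool = False
    uses_system_in_eu: bool = False


def likely_in_scope(activity: AIActivity) -> bool:
    """Return True if any of the four triggers applies."""
    return any(
        (
            activity.places_system_on_eu_market,
            activity.output_used_in_eu,
            activity.imports_or_distributes_in_eu,
            activity.uses_system_in_eu,
        )
    )


# A non-EU vendor whose scoring output is used by an EU client.
print(likely_in_scope(AIActivity(output_used_in_eu=True)))
```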

Who Governs AI in Europe

To make sure the AI Act is more than just words on paper, the EU has built a full governance ecosystem — a network of institutions meant to coordinate, supervise, and guide AI development across Member States.

- At the center is the AI Office, a new EU body dedicated to deepening institutional expertise and ensuring compliance with the regulation, especially for general-purpose AI models.

- Every Member State must also appoint at least one national authority to oversee the implementation of the AI Act. These bodies must be independent, well-resourced, and skilled in AI, data protection, and cybersecurity. They report to the Commission every two years and can offer tailored guidance to startups and SMEs.

- To bring external knowledge into the process, the EU is also creating an Advisory Forum — a diverse group of representatives from industry, civil society, and academia that will provide technical insight and recommendations to the Commission.

- Supporting enforcement, a Panel of Independent Experts will help identify emerging risks, develop evaluation tools, and ensure impartiality in the assessment of AI models.

- Finally, coordination across the Union will be led by the European Artificial Intelligence Board. Composed of national representatives and EU institutions, the Board will harmonize regulatory practices, promote AI literacy, and help the Commission apply the Act consistently across Europe.

Together, these bodies aim to make AI governance in the EU not just reactive, but proactive — combining regulation, expertise, and collaboration to keep pace with technological change.

Enforcement & Penalties: They’re Not Joking

The AI Act introduces a tiered system of sanctions designed to be effective, proportionate, and dissuasive — while still taking into account the realities of startups and SMEs.

- Non-compliance comes at a steep cost. Violating the outright bans listed under Article 5 can result in fines of up to €35 million or 7% of global annual turnover, whichever is higher.

- Other breaches, such as failing to meet provider obligations, transparency requirements, or conformity assessments, can lead to penalties of up to €15 million or 3% of global annual turnover, whichever is higher.

- Even providing incorrect, incomplete, or misleading information to regulators carries consequences, with fines of up to €7.5 million or 1% of global annual turnover, whichever is higher.

In short, the AI Act sets a new benchmark: compliance is no longer optional, and accountability is priced accordingly.
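To see how the "fixed amount or percentage of turnover" rule plays out, here is a small worked sketch using the three tiers above. It assumes the "whichever is higher" rule applies to every tier and ignores any special treatment for startups and SMEs; the tier names and the `max_fine` function are hypothetical labels for illustration.

```python
# Tier name -> (fixed cap in euros, percent of global annual turnover)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "misleading_information": (7_500_000, 1),
}


def max_fine(tier, global_turnover_eur):
    """Upper bound of the fine: the higher of the fixed cap and the turnover share."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_based = global_turnover_eur * percent / 100
    return max(fixed_cap, turnover_based)


# A company with EUR 1 billion turnover: 7% (EUR 70 million) exceeds the EUR 35 million cap.
print(max_fine("prohibited_practice", 1_000_000_000))

# A company with EUR 100 million turnover: 7% is EUR 7 million, so the fixed cap applies.
print(max_fine("prohibited_practice", 100_000_000))
```

These are ceilings, not automatic amounts; the actual fine in a given case is set by the enforcing authority.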

Timeline: When Everything Happens

- February 2025: The general provisions and the obligations related to prohibited practices start to apply

- July 2025: The Code of Practice is published

- August 2025: Institutional governance, the sanction mechanism, and the rules for general-purpose AI models start to apply

- August 2026: Almost all of the remaining regulation starts to apply, with exceptions for some systems (see August 2027)

- August 2027: Obligations for high-risk AI used as a safety component start to apply

The General-Purpose AI (GPAI) Code of Practice is a voluntary tool, prepared by independent experts in a multi-stakeholder process, designed to help industry comply with the AI Act’s obligations on safety, transparency, and copyright for providers of general-purpose AI models.
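For teams that want to track the timeline above in their own tooling, a date lookup is one simple option. The sketch below is illustrative: the article gives only months, so the day of the month (the 2nd) is an assumption to check against the official text, and the labels are shortened paraphrases of the bullets.

```python
from datetime import date

# (start date, what begins to apply); days of the month are an assumption.
MILESTONES = [
    (date(2025, 2, 2), "General provisions and prohibited practices"),
    (date(2025, 8, 2), "Governance, sanctions, and general-purpose AI model rules"),
    (date(2026, 8, 2), "Most remaining provisions"),
    (date(2027, 8, 2), "High-risk AI used as a safety component"),
]


def provisions_in_effect(on):
    """Return the labels of all milestones that have started by the given date."""
    return [label for start, label in MILESTONES if start <= on]


for label in provisions_in_effect(date(2025, 9, 1)):
    print(label)
```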

The Global Picture: AI Laws Around the World

The EU isn’t alone in regulating AI. According to the OECD’s live repository, there are now over 1,000 AI policy initiatives across 69 countries.

Top 10 Jurisdictions by Number of AI Policies (2024):

- United States: 82 policies

- European Union: 63 policies

- United Kingdom: 61 policies

- Australia: 44 policies

- Germany: 38 policies

- Portugal: 36 policies

- Turkey: 36 policies

- France: 34 policies

- India: 32 policies

- Colombia: 30 policies

Four Categories of AI Policies Globally:

- Governance Policies: National strategies, public consultations, AI coordination bodies, use in public sector

- Guidance & Regulation Policies: Emerging regulations, oversight bodies, standards and certification

- AI Enablers & Incentive Policies: Networking platforms, data access, research infrastructure, skills and education, public awareness

- Financial Support Policies: Research grants, business R&D funding, fellowships, procurement programs, centers of excellence

Key insight: Most countries prioritize governance policies over strict regulation.

What Does This All Mean?

This is a shift in how work is managed and who stays in control. The EU wants humans in the loop, especially when technology affects jobs, dignity, or rights.

According to KPMG and University of Queensland research:

- 3 in 5 people are wary about trusting AI systems

- 71% expect AI to be regulated

- Even tech CEOs have called for greater AI regulation

The AI Act responds to these concerns by establishing clear guardrails without stifling innovation. It aims to balance:

- Fostering AI adoption and investment

- Protecting fundamental rights and democracy

- Ensuring safety and trustworthiness

- Creating a harmonized EU market

The practical reality:

- AI in HR isn’t going away — but it’s getting serious oversight

- Transparency is becoming mandatory, not optional

- Employee rights are being reinforced through technology regulation

- Companies that ignore this face fines that can exceed GDPR’s maximums: up to €35 million or 7% of global turnover, compared with €20 million or 4% under GDPR

- AI is also reshaping workplace safety: its use for surveillance is restricted, but exceptions exist when it protects lives

The AI Act redefines how we think about and manage AI — similarly to what happened with data privacy after GDPR. It’s not just about compliance checkboxes. It’s about fundamentally rethinking the human-machine relationship in the workplace.