Technology

Recent advances in machine translation reflect the growing capacity of artificial translation technologies to match words or expressions in a source language with those of a target language. While these advances are specific to the field of language translation, they nonetheless reflect basic technological developments in a branch of artificial intelligence (AI) known as natural (human) language processing. Unsurprisingly, this dependence of machine translation on natural language processing is a manifestation of the fundamental bond tying translation to language and an indicator of the delicate nature of machine translation. For, at some basic level, language is itself a translation. The life events it captures or mediates and the emotions, feelings and thoughts it conveys inescapably undergo a transformation at the very moment they are transposed into the medium of verbal signs. Things we say or write are already reshaped by language and its sociocultural embeddedness. This is perhaps the meaning of Franz Kafka’s reputed claim that “all language is a poor translation.”

These observations indicate that language never exactly maps what is supposed to be ‘out there’, despite our basic need to describe things truthfully and to talk or communicate in ways that bind us to others and the world. Some time ago, Umberto Eco wrote in his “A Theory of Semiotics” that it is not possible to speak, write and signify without the simultaneous capacity to lie, forge or dissimulate. All these fundamental human faculties, Eco noted, rest on the association of words with things in ways that entail strong elements of cultural creation and deliberation. Words or signs are social and cultural conventions, and their making and use always entail an act of choice. A similar claim has been put forward in one of the most imaginative texts written in the history of western thought, namely Gregory Bateson’s “A Theory of Play and Fantasy”. Signifying is not possible, Bateson says, without negating or dissimulating the real, without turning things into signs. As socially made entities, signs provide the foundations for other, more subtle and pervasive linguistic conventions such as metaphors, whereby one context is related to another, producing a series of associations through which diverse areas of life or meaning are at once distinguished and brought together.

These remarks are essential, indeed presuppositions, for understanding the real challenge that machine translation confronts. If words were clear-cut, unambiguous and largely independent of one another, then speaking, writing or communicating would entail a straightforward mapping of things or states (including feelings, thoughts or emotions) onto words or signs. In the first years of machine translation, decades ago, AI embraced (perhaps for practical reasons) such a simple and mechanical view of translation and signification. Translation was engineered as a system of rules applied to unambiguous vocabularies. In this undertaking, the meaning of thousands of words in the source and target languages was defined in advance and entered into an AI system, which was instructed to match each word or phrase in the source language with its counterpart in the target language.
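
The rule-based view described above can be illustrated with a minimal sketch of word-for-word dictionary lookup. It assumes a tiny, hypothetical English-Italian dictionary (`LEXICON`) and an illustrative function name (`translate_by_rules`); it is not a reconstruction of any historical system. Each source word has exactly one predefined equivalent, so the surrounding context plays no part in the result.

```python
# A minimal sketch of rule-based, word-for-word translation.
# LEXICON is a hypothetical toy dictionary: each English word is mapped
# in advance to exactly one Italian word, regardless of context.
LEXICON: dict[str, str] = {
    "the": "la",
    "chair": "sedia",
    "is": "è",
    "free": "libera",
}


def translate_by_rules(sentence: str) -> str:
    """Replace each word with its fixed dictionary equivalent.

    Words missing from the dictionary are left untranslated in angle brackets.
    """
    output = []
    for word in sentence.lower().split():
        output.append(LEXICON.get(word, f"<{word}>"))
    return " ".join(output)


if __name__ == "__main__":
    print(translate_by_rules("The chair is free"))  # la sedia è libera
    # The same fixed mapping is applied whatever the context:
    print(translate_by_rules("The chair of the committee"))  # la sedia <of> la <committee>
```

In the second call the fixed entry for chair still yields "sedia", a piece of furniture, even where the person chairing a committee is meant, and any word missing from the dictionary simply falls through untranslated.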

It is hardly surprising that these early AI efforts soon stalled. Language is too ambiguous to be squeezed into such neat and rather sterile practices. Words can hardly be separated from one another; sentences and phrases build upon or flow into each other, and associations are made on a metaphorical as well as a literal plane. Current machine translation has made significant progress in handling some of the inherent ambiguity of language by mathematically modelling how a word can be part of several contexts. It is now possible to let machines discriminate between the different nuances that, say, the word chair acquires in contexts as diverse as a home, a theatre hall, a classroom, a restaurant or a train compartment. It is also possible to let machines discriminate between literal and metaphorical uses, as in “burning a chance” and “burning wood”. Instead of relying on pre-established rules to match fixed vocabularies in a source and a target language, recent translation technologies are trained on massive numbers of paired words, phrases or sentences in the two languages, drawn from existing documents or sites (e.g. Wikipedia), so that machines learn to ‘recognize’ when these words occur together.

Machine training is thus accomplished by using massive sources of data and content that are made sense of statistically, that is, by counting how often words occur together. The machine is exposed to thousands or millions of examples and uses statistics (probability theory) to ‘learn’ the many different circumstances in which one word or phrase is matched with another (e.g. a pasta dish and a restaurant). Once training is completed, the ‘learning’ can be applied to new occasions outside the context of training. As in human primary socialization, learning of this kind inevitably embodies all kinds of predilections and biases present in the data and content used to train the machine.
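
Counting how often words occur together, and turning the counts into probabilities, can be sketched in a few lines. This is a simplified illustration under stated assumptions: a hypothetical four-sentence corpus, whitespace tokenization, co-occurrence measured within a single sentence, and the estimate P(b | a) = count(a, b) / count(a). The names `CORPUS`, `count_cooccurrences` and `cooccurrence_probability` are illustrative. Current systems rely on neural networks trained on vastly larger data, but the sketch shows why the biases of the data carry over: the estimates reflect nothing but the sentences counted.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy corpus; real systems are trained on millions of sentences.
CORPUS: list[str] = [
    "we ordered a pasta dish at the restaurant",
    "the restaurant serves a pasta dish every day",
    "she sat on a chair in the restaurant",
    "he lit the stove with dry wood",
]


def count_cooccurrences(sentences: list[str]) -> tuple[Counter, Counter]:
    """Count, per sentence, each distinct word and each unordered pair of distinct words."""
    word_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for sentence in sentences:
        words = sorted(set(sentence.split()))  # each word counted once per sentence
        word_counts.update(words)
        # combinations() of a sorted list yields pairs already in sorted order
        pair_counts.update(combinations(words, 2))
    return word_counts, pair_counts


def cooccurrence_probability(a: str, b: str, word_counts: Counter, pair_counts: Counter) -> float:
    """Estimate P(b occurs in a sentence | a occurs in it) as count(a, b) / count(a)."""
    if word_counts[a] == 0:
        return 0.0
    pair = tuple(sorted((a, b)))
    return pair_counts[pair] / word_counts[a]


if __name__ == "__main__":
    words, pairs = count_cooccurrences(CORPUS)
    print(cooccurrence_probability("pasta", "restaurant", words, pairs))  # 1.0
    print(cooccurrence_probability("restaurant", "pasta", words, pairs))  # about 0.67
    print(cooccurrence_probability("pasta", "wood", words, pairs))  # 0.0
```

The asymmetry follows from the data: every sentence mentioning pasta also mentions a restaurant, but not the other way round. A different corpus would yield different probabilities, along with whatever predilections it contains.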

But when we claim that machines learn, we basically mean that they calculate the probability that two words or phrases are associated with one another. In the case of translation, these probabilities are used to match a word or phrase in a source language with one in a target language. The outcome is often stunning and, occasionally, admirable. But it is vital to point out that machines neither speak nor translate in the sense humans do, by imagining, rehearsing, juxtaposing and reconstructing the contexts in which the statements of the two languages (source and target) occur. Since machine translation, together with question answering and content creation by large language models, is big business today, it is of utmost importance to point out that machines do not understand the way humans do, contrary to what Google or other business actors may lead us to believe. Machines only compute or calculate.
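
Using such probabilities to match a source word with a target word can be illustrated with a deliberately crude sketch. It assumes a small, hypothetical set of aligned English-Italian sentence pairs and estimates P(t | s) as the number of times target word t appears in sentences aligned with source word s, divided by the total number of target words aligned with s. The names `PARALLEL`, `translation_table` and `best_match` are illustrative; this count-based table is far simpler than the statistical and neural models used in practice.

```python
from collections import Counter, defaultdict
from typing import Optional

# Hypothetical aligned sentence pairs (English, Italian), for illustration only.
PARALLEL: list[tuple[str, str]] = [
    ("the chair", "la sedia"),
    ("a chair", "una sedia"),
    ("the red chair", "la sedia rossa"),
    ("the wood", "il legno"),
    ("dry wood", "legno secco"),
]


def translation_table(pairs: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Estimate P(target word | source word) from co-occurrence in aligned pairs."""
    counts: defaultdict[str, Counter] = defaultdict(Counter)
    for source, target in pairs:
        for s in source.split():
            # Every target word in the aligned sentence counts as a candidate for s.
            counts[s].update(target.split())
    table: dict[str, dict[str, float]] = {}
    for s, c in counts.items():
        total = sum(c.values())
        table[s] = {t: n / total for t, n in c.items()}
    return table


def best_match(word: str, table: dict[str, dict[str, float]]) -> Optional[str]:
    """Return the most probable target word, or None if the word was never seen."""
    candidates = table.get(word)
    if not candidates:
        return None
    # On ties, max() returns the first candidate encountered.
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    table = translation_table(PARALLEL)
    print(best_match("chair", table))  # sedia
    print(best_match("wood", table))  # legno
    print(best_match("red", table))  # la
    print(best_match("table", table))  # None
```

Here chair is matched with "sedia" (probability 3/7) and wood with "legno", while red comes out as "la": with a single example, the three candidate words are tied and `max` simply returns the first one it encounters. The match is a calculation over counts and nothing more.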

These observations are essential for appreciating the economic and organizational implications of machine translation. While machine translation is bound to diffuse, the texts produced by AI-based translation systems will most often require human editing and some sort of final expert inspection of their meaningfulness and contextual relevance. This follows inescapably from our conclusion that machines do not translate in ways that do justice to the ambiguous nature of language, but assemble texts on the basis of the probabilities of tokenized words and phrases. If this is true, then the diffusion of machine translation in firms and organizations will bring about hybrid forms of human/machine collaboration and a new division of labour between humans and machines. The outcome of these developments, some of which are already under way, will likely be far more delicate than the prevailing and, in some sense, simplified debate on AI job substitution (AI stealing jobs) versus augmentation (AI helping humans to perform better) suggests. Firms, organizations and state agencies whose operations entail multilingual contexts will naturally feel the repercussions of these developments most dramatically. There will be significant shifts in the costs and time dedicated to translation, new tasks developing around the management of multilingual texts, and a shifting landscape of jobs and responsibilities that may be hard to foresee at the moment.

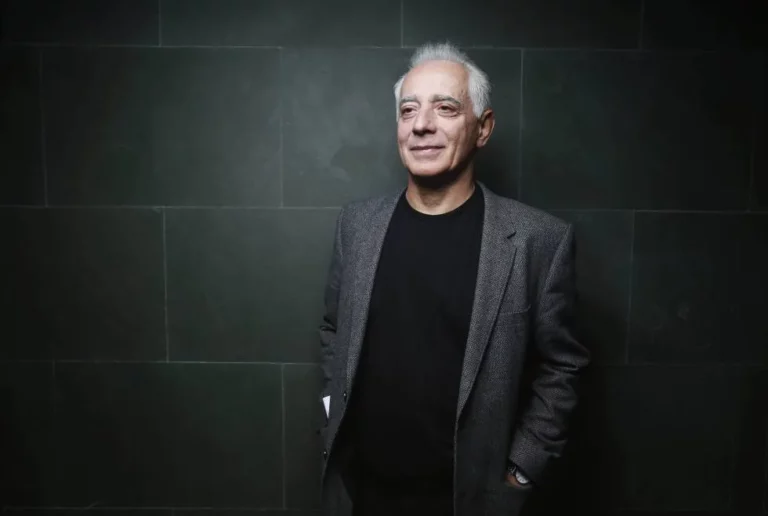

Jannis Kallinikos

Professor at Luiss University and at London School of Economics

Jannis Kallinikos is a professor at Luiss University and professor emeritus at the London School of Economics. His research focuses on information and communication technologies and the effects of their diffusion on the functioning of institutions and the economy.