Trends

Decades of digital innovation have created wonderful opportunities and reshaped how many dimensions of human life are organized. The latest innovations, however, connect and integrate with humans' very cognitive abilities. At the frontier is the design of sociotechnical systems whose value lies in fully integrating the faculties of machines and humans, united in pursuing the ambitious goals needed to tackle the challenges of the 21st century.

The structure of technological innovation is evolving. In the realm of digital technologies, the paradigm shift is profound. Previously, the technical design of innovation took precedence over any consideration of its societal impact. Today, that approach seems inadequate. The societal challenges posed by innovations in recent decades have escalated into significant economic, cultural, geopolitical, psychological, and democratic concerns. These challenges are likely to intensify with the emergence of advanced forms of artificial intelligence. The resolution of these issues will undoubtedly shape the society of the future. This underscores the pressing need for reflection: how will the design of digital technologies adapt? Can we anticipate the consequences of technologies before they are deployed?

Undoubtedly, innovations like search engines, social networks, smartphones, digital maps, online marketplaces, cryptocurrencies, machine translation, and many others have been revolutionary. For years, such innovations have followed a three-step development process: first, create the innovative idea; next, promote its adoption among as many individuals and organizations as possible; and lastly, tackle its societal implications. This method has indeed accelerated the pace of innovation. However, it is now worth asking whether this approach has also improved our quality of life.

Digital technologies have undeniably consumed an increasing portion of people’s time and attention. Yet, the quality of these engagements remains debatable. Workers worry about machines taking their jobs, citizens are inundated with misinformation and hate speech, and consumers are often burdened with tasks that used to be managed by businesses.

Amid these challenges, a shift is evident. Society is adapting to an environment where nearly everything pertaining to information and knowledge operates on digital infrastructures, and relationships are mediated by giant platforms. Governments are increasingly stepping in, guiding these platforms to prioritize user security and rights, curb their monopolistic tendencies, foster competition, and mitigate the social and economic risks propelled by their technologies. China's approach is more assertive; the United States leans towards negotiation between government and platforms; Europe strikes a balance between the two. Regulation alone, however, is not the answer. More likely, subsequent innovations will address the challenges posed by their predecessors, and they may lead to better solutions if the process by which new technologies are conceived, designed, developed, evaluated, incentivized, and used is itself reviewed. Why has this become so important right now? What is special about the frontiers of technological innovation?


Technologies invariably influence society. However, the closer their interaction with humans, the greater the need for meticulous design. For instance, when it comes to health, as seen in pharmaceutical technologies, rigorous design ensures minimal undesirable side effects. In the food sector within developed nations, products are crafted to offer consumers complete transparency about the supply chain.

And when safety is paramount, as with cars and even more so with airplanes, products are designed to the strictest safety standards for passengers. These examples illustrate how society has devised methods to ensure technological systems prioritize user welfare.

With the latest digital technologies serving as cognitive tools, a paradigm shift is needed for several reasons:

- The true value of these technologies lies in their relationship with humans. It’s the users who shape, enhance, and redefine them, playing a pivotal role in the service they offer.

- These technologies often act as extensions of the human body, interfacing directly with essential brain functions and subtly influencing behaviors in ways users might not fully comprehend.

- Network dynamics result in dominant technologies eclipsing their rivals, leading to monopolistic scenarios that may not always foster a healthy environment for human cultural growth.

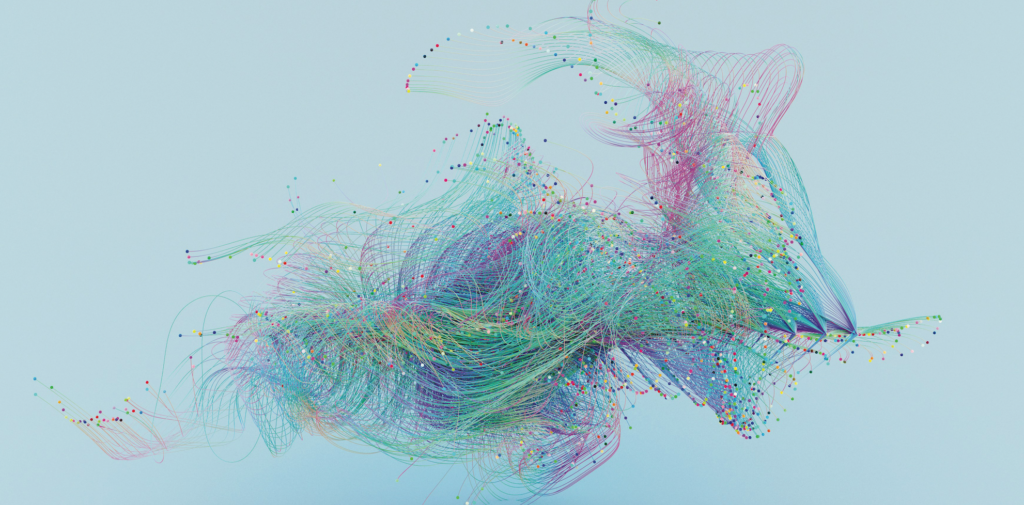

Indeed, groups of humans and technologies that function as cognitive aids co-evolve, forming complex systems. The crucial shift lies here: design considerations are now centered on the crafting of these complex systems, not just their technological aspects.

To foster outcomes with a beneficial developmental trajectory, the focus isn’t merely on designing machines. Instead, it’s on designing socio-technical systems comprising both machines and humans.

Symbiotic Connections

Imminent’s Annual Report 2024

A journey through neuroscience, localization, technology, language, and research. An essential resource for leaders and a powerful tool for understanding more deeply the perceived trade-off between artificial intelligence and humans, and their respective roles in designing socio-technical systems.

The issue deserves a conceptual premise.

Gry Hasselbalch, co-founder of Data Ethics, a Danish think tank, writes: «The internet, for example, constitutes a type of applied science and knowledge written down in models, manuals and standards and practiced by engineers and coders, but it is also the result of use in various cultural settings, and it embodies social and legal requirements, political and economic agendas, as well as cultural values and worldviews».

Hasselbalch then points out: «Technological or scientific processes are not objectively given or representative of natural facts and a natural state of affairs. On the contrary, these very processes and facts are conditioned by their place in history, culture and society and may also be challenged as such». These clear ideas form the basis of the paradigm shift: socio-technical systems are designed to include both machines and people, so that they can achieve radically new results while bearing integrated responsibility for their consequences.

From this perspective, science and engineering join social physics, as MIT's Alex 'Sandy' Pentland, founder of the discipline of the same name, would put it. Design is evaluated not only on how systems work, but also on their ethical, epistemological and social quality. The design of socio-technical systems directly affects people's lives, so designers must take responsibility for the consequences of what they do. Clearly, the process by which these systems are designed, incentivized, evaluated, disseminated and further innovated needs to be rethought.

Pentland spoke with Imminent about the genesis of successful innovation. «On the one hand, you need to quantify human behavior in order to graft its characteristics into the socio-technical systems to be designed. On the other hand, the designs must set goals that are substantially aligned with human interests. An example as important as few others is the UN's system of sustainability goals: they are of extraordinary human importance, and progress towards the 2030 Agenda is measured quantitatively. The authors of the 2030 Agenda have managed to quantify the results of social innovations».


Obviously, this approach finds in mathematics a common language between those who work on machine engineering and those who work on social analysis, but it can only work if the goals are also shared. It is not a question, Pentland reasons, of building artificial intelligence in isolation from the people who will use it. It is a question of building an integrated system that achieves the goals people want. Otherwise, it will not serve the common goals and will be counterproductive. He states: «In order to achieve such integration and avoid unintended negative outcomes, it is necessary, on the one hand, to have value-driven participation of the various stakeholders and, on the other hand, to measure and design the capabilities of machines in a way that puts the perspective of humans and the environment first. The systems we build should serve to augment human intelligence and the wisdom of cultural traditions, rather than attempting to be non-human artificial intelligences».

The aspiration to augment human intelligence and uphold cultural wisdom is especially pertinent to sociotechnical systems facilitating translations.

Pentland states: «While sociotechnical systems can be engineered to overcome language barriers in real time, their effectiveness relies on their ability to act as cultural bridges. Their aim should be to enrich intercultural experiences. If they overshadow linguistic diversity or diminish the vibrancy of different languages, they are fundamentally flawed.» The benchmark for success must align with the most noble and equitable objectives. Designers can no longer claim neutrality behind their creations, deflecting accountability onto the users. The responsibility lies with the designer. «If I were a translator, I would be worried, but not too worried,» says Pentland.

As technological systems grow increasingly powerful, could the equilibrium shift towards heavier machine involvement, leading to a significant decline in the role of humans?

Those crafting effective sociotechnical systems may have little to gain by leaning in this direction. That is the view of Dino Pedreschi, a professor at the University of Pisa and a pioneer in data mining and AI rooted in human-centric values, as he tells Imminent:

«Having smart individuals and sophisticated machines doesn't automatically yield intelligent sociotechnical systems. Designers need to factor in the feedback loops inherent in any intricate system, as these can often lead to cognitive degradation. If prevalent language models today are engineered to appeal to the average user by rounding off the edges of their responses, they might produce a mass of conformist texts ready for publication. Should the next generation of such AIs be trained on content generated by these models, their 'average' tendencies could become even more pronounced».


Like Pentland, Pedreschi maintains that diversity is vital for sustaining valuable sociotechnical systems: «This is especially true in translation. If machine translation algorithms are trained on text already translated by other automated systems, and thus, for example, biased towards mainstream interpretations, the resulting quality may decline. An excess of conformity in translations might initiate a negative feedback loop, making it even harder to capture linguistic nuances. In fact, retaining human translators serves as a protective measure against this possible systemic degradation of machine translation». Pedreschi further argues that anything produced by AI should be labeled as such, so that it is not mistaken for human-produced content.
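Pedreschi's feedback-loop argument can be illustrated with a toy simulation. It is a minimal sketch under loud assumptions, not a model of any real translation system: each text is reduced to a single number on a hypothetical "style" axis, a "model" simply learns the mean and spread of its training corpus, and it generates new texts with that spread scaled down by a `shrink` factor below 1, standing in for "rounding off the edges". The `human_share` parameter, also hypothetical, sets what fraction of each new training corpus comes from fresh human-written text.

```python
import random
import statistics


def simulate_generations(n_generations, human_share, shrink=0.8,
                         n_samples=2000, seed=0):
    """Toy model of recursive training (all parameters are assumptions).
    Returns the spread (population std. dev.) of the corpus after each generation."""
    rng = random.Random(seed)

    def human_text():
        # Assumption: human-written texts come from a fixed, diverse distribution.
        return rng.gauss(0.0, 1.0)

    corpus = [human_text() for _ in range(n_samples)]
    spreads = []
    for _ in range(n_generations):
        # "Train": the model learns only the corpus mean and spread.
        mu = statistics.fmean(corpus)
        sigma = statistics.pstdev(corpus)
        # "Generate": outputs are pulled towards the average by `shrink`.
        n_human = int(human_share * n_samples)
        machine = [rng.gauss(mu, sigma * shrink)
                   for _ in range(n_samples - n_human)]
        # Next training corpus: machine output plus any fresh human text.
        corpus = machine + [human_text() for _ in range(n_human)]
        spreads.append(statistics.pstdev(corpus))
    return spreads


machine_only = simulate_generations(10, human_share=0.0)
with_humans = simulate_generations(10, human_share=0.3)
print(f"machine only: {machine_only[-1]:.2f}, 30% human: {with_humans[-1]:.2f}")
```

With no human input, the spread shrinks by roughly the `shrink` factor every generation, so after ten rounds the corpus is far more uniform than the one it started from; keeping a steady share of human text lets the spread settle at a much higher level. The exact numbers depend entirely on the toy assumptions; only the direction of the effect mirrors what Pedreschi describes.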

Pentland believes diversity is fundamental to valuable collective intelligence. In networks, certain behaviors concentrate the exchange of ideas within a closed group, while others push individuals into increasing isolation. Both tendencies, whether they create echo chambers or disconnection, degrade the quality of the decisions that emerge.

And, importantly, these effects can be measured quantitatively.

Pedreschi suggests that potential interfaces between human translators and machines could guide both toward optimal decisions. «For example,» he suggests, «machines can present draft translations with color-coded segments based on the machine’s confidence in the accuracy of its translation. This will allow human translators to focus on parts of the text that are less likely to be correct.» But how can the machines measure these probabilities? «If the training texts show considerable variation in the translation of certain sentences, the probability of accuracy will be lower,» Pedreschi explains. «Conversely, if the sources are consistent, it’s more likely that those parts of the text are unproblematic. Throughout this process, it’s important to include humans with different linguistic and cognitive skills. If we rely solely on machines trained on homogeneous texts, we risk the aforementioned problems of conformity.»
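The interface Pedreschi describes can be sketched in a few lines. This is a hypothetical illustration, not how any particular machine translation engine computes confidence: it assumes that, for each source segment, the translations of that segment found in the training texts are already available, and it uses the share of them that agree on the most common rendering as a simple proxy for "consistency of sources". The thresholds `high=0.8` and `low=0.5`, the green/yellow/red palette and the example sentences are arbitrary choices.

```python
from collections import Counter


def color_code(confidence, high=0.8, low=0.5):
    """Map a confidence score in [0, 1] to a display color (thresholds assumed)."""
    if confidence >= high:
        return "green"
    if confidence >= low:
        return "yellow"
    return "red"


def annotate_segment(source, seen_translations):
    """Propose the most frequent translation seen in training data and score it
    by the share of those translations that agree with it. Consistent sources
    give a high score; considerable variation gives a low one."""
    if not seen_translations:
        return source, "", 0.0, "red"
    counts = Counter(t.strip() for t in seen_translations)
    best, n_best = counts.most_common(1)[0]
    confidence = n_best / len(seen_translations)
    return source, best, confidence, color_code(confidence)


# Hypothetical English-to-Italian examples.
draft = [
    annotate_segment("Good morning", ["Buongiorno"] * 9 + ["Buon giorno"]),
    annotate_segment("It's raining cats and dogs",
                     ["Piove a catinelle", "Piove a dirotto",
                      "Piovono cani e gatti", "Diluvia", "Piove a catinelle"]),
]
for source, proposal, confidence, color in draft:
    print(f"[{color:6}] {source!r} -> {proposal!r} ({confidence:.0%})")
```

Here the greeting is marked green at 90% agreement, while the idiom, rendered in four different ways, is marked red and becomes the first place a human translator would look.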

This solution is only a glimpse into the complex realm of sociotechnical systems design, a methodology that is still in its infancy and requires further exploration. Pentland envisions that this methodology must inherently allow for the continuous reevaluation of previously obtained results, given the ever-evolving nature of sociotechnical systems.

The human element within these systems continually introduces changes, both small and significant. This is particularly evident in language. As a dynamic entity shaped by societal shifts, language serves as a historically contextualized tool that allows humans to encode and share a portion of their knowledge. Consequently, Pentland posits that socio-technical systems should be modular, allowing for easy quantitative assessment of each module's performance. This allows for timely revision of algorithms that may be underperforming.

More critically, the design of the system and its individual modules should be directly and transparently aligned with the overarching goals. This ensures that any module that needs to be redesigned or modified can be identified quickly. «To be clear,» adds Pentland, «software-based modules work together with human-based modules to execute the combined algorithms of the human-machine system. Adapting human modules may require reorganizing or even retraining individuals. And such changes can only be implemented effectively if people involved have a clear understanding of how they fit into the larger goals of the system.»
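Pentland's point about modules that are transparently aligned with goals can be made concrete with a small sketch. Everything here is a hypothetical illustration: the `Module` record, the assumption that each module, software or human, reports a single performance score on a 0 to 1 scale, and the targets. What it shows is the traceability Pentland describes: because every module names the overarching goal it serves and is measured against a target, the underperforming ones can be listed immediately, worst first.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    kind: str      # "software" or "human"
    goal: str      # overarching system goal this module serves
    score: float   # latest measured performance, assumed 0..1
    target: float  # level the goal requires, assumed 0..1


def modules_needing_revision(modules):
    """Return modules below target, sorted from the largest shortfall down."""
    below = [m for m in modules if m.score < m.target]
    return sorted(below, key=lambda m: m.score - m.target)


# Hypothetical modules of a translation system.
system = [
    Module("terminology lookup", "software", "accurate translations", 0.93, 0.90),
    Module("draft translation", "software", "accurate translations", 0.71, 0.85),
    Module("post-editing", "human", "accurate translations", 0.88, 0.90),
    Module("style review", "human", "preserve linguistic diversity", 0.80, 0.75),
]
for m in modules_needing_revision(system):
    print(f"revise {m.kind} module '{m.name}' (goal: {m.goal}): "
          f"{m.score:.2f} < {m.target:.2f}")
```

Revising the "post-editing" module would mean reorganizing or retraining people rather than changing code, which is why Pentland stresses that the people involved need to understand how they fit into the system's goals.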


If all this is true, the challenge becomes designing sociotechnical systems that pass a set of strategic tests. «We can establish sandboxes or controlled environments to conduct smaller-scale experiments,» suggests Pedreschi. «Different algorithms can be trialed with various user groups to assess the quality of outcomes produced by each.» Pentland adds: «The testing process typically begins with simulating key components, followed by the entire system. It culminates in launching a pilot program within select communities, essentially setting up a controlled experiment where participants provide informed consent. This evaluation isn't just a preliminary step; it must persist even after the system is rolled out on a larger scale. Conditions change, and to remain relevant, continuous adjustments and refinements to the system are essential.»

This requires a steady influx of human intelligence, ensuring we avoid the pitfalls of unchecked self-learning that could foster conformity and stifle innovation, as Pedreschi highlighted: «In such complex systems, the objective is to strike the right balance between conformity and diversity.» Moreover, Hasselbalch notes that issues related to "data pollution" stem from biases underlying the data. Detecting these biases demands ongoing quality monitoring of the system and its outcomes.
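Pedreschi's sandbox suggestion, trialing different algorithms with different user groups, follows the logic of a randomized controlled experiment. A minimal sketch, assuming each participant rates the quality of the output they received on a numeric scale: participants are randomly assigned to variants, and a permutation test (one standard choice among several; neither interviewee specifies a method) estimates how often a difference in mean quality at least as large as the observed one would arise if the two variants were in fact equivalent. The variant names and ratings are invented for illustration.

```python
import random
import statistics


def assign_groups(participant_ids, variants, seed=0):
    """Randomly assign participants to variants in (near-)equal numbers."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {p: variants[i % len(variants)] for i, p in enumerate(shuffled)}


def permutation_test(scores_a, scores_b, n_permutations=5000, seed=0):
    """Two-sided permutation test on the difference in mean scores.
    Returns an estimated p-value."""
    rng = random.Random(seed)
    observed = abs(statistics.fmean(scores_a) - statistics.fmean(scores_b))
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate away from exactly zero.
    return (hits + 1) / (n_permutations + 1)


groups = assign_groups(range(16), ["algorithm A", "algorithm B"])
# Hypothetical 1-5 quality ratings collected from each group in the sandbox.
ratings_a = [4, 5, 4, 5, 5, 4, 5, 4]
ratings_b = [2, 3, 2, 3, 2, 3, 2, 3]
print(sum(v == "algorithm A" for v in groups.values()), "participants on A")
print("p =", permutation_test(ratings_a, ratings_b))
```

In Pentland's terms, such a check would not end with the pilot: the same comparison can be rerun on fresh ratings after a wider rollout, as conditions change.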

In the realm of translation systems, the human modules can be responsible for instructing and supervising the learning processes of the technological modules. They also play a crucial role in diversifying the range of experiences and perspectives present in the texts used for software training.

The richness of language can be nurtured by translators but also by skilled users and other stakeholders, according to algorithms and methods yet to be designed. As Hasselbalch points out, laws and ethical standards serve as behavioral frameworks for those crafting sociotechnical systems. This normative challenge permeates every facet of technology development, underlining the importance of a sociotechnical approach for those still entrenched in a purely technological design mindset.

Translation systems are among the earliest examples of intentionally designed socio-technical systems. Insights gained from their development offer invaluable lessons for various domains. In turn, the field of translation can benefit further from a systematic approach to aligning sociotechnical systems with well-defined and quantifiable goals.

Ultimately, the goal of such advances is to facilitate more equitable and informed human decision-making. Producers of sociotechnical systems share a degree of responsibility for the choices made by their users, which are influenced by the quality of the services provided. This shared responsibility underscores the imperative for continuous improvement, a pursuit that should be enthusiastically embraced by those who manage these sociotechnical systems.

About the interviewees

Alex Pentland

Pentland began his career as a lecturer in both computer science and psychology at Stanford University before joining MIT in 1986. At MIT, he held prominent roles at the Media Laboratory, the School of Engineering, and the Sloan School. Apart from academic roles, he has served on several advisory boards, including the UN Global Partnership for Sustainable Development Data and the OECD. He co-founded the Media Lab Asia laboratories and has been involved with multiple startups. A recognized figure in computer science, Pentland is highly cited and played significant roles in the discussions leading to the EU's GDPR and the UN's Sustainable Development Goals. His ideas are set out in his book "Social Physics. How Social Networks Can Make Us Smarter" (Penguin, 2014) and in his contribution to Cosimo Accoto's book Il mondo in sintesi. Cinque brevi lezioni di filosofia della simulazione (Egea, 2022).

Dino Pedreschi

Dino Pedreschi is a distinguished computer science professor at the University of Pisa, renowned for his work in data science and AI. He co-directs the Pisa KDD Lab, a collaboration between the University of Pisa and the Italian National Research Council, focusing on big data analytics, machine learning, and AI's societal impact. Pedreschi has contributed to areas such as human mobility, sustainable cities, social network analysis, and data ethics, and is at the forefront of Human-centered Artificial Intelligence through the European Humane-AI network. He founded SoBigData.eu, a significant European research infrastructure for big data analytics. Pedreschi also plays a pivotal role in Italy's AI strategy, advising the Italian Ministry of Research and leading the Data Science PhD program at Scuola Normale Superiore in Pisa.

Gry Hasselbalch

Gry Hasselbalch is a prominent author and scholar specializing in data and AI ethics and governance. With a career spanning over 20 years, she adeptly merges policy and academic perspectives, having influenced major global policy dialogues on AI and data. As Research Lead for the EU's InTouchAI.eu initiative until July 2024, she guided its research activities and supported the European Commission in international collaborations on AI ethics and regulation. Hasselbalch was instrumental in the creation of the EU's AI ethics guidelines through her role in the EU's High-Level Expert Group on AI from 2018 to 2020, which paved the way for the AI Act. Additionally, she is part of the team responsible for the EU-US Trade and Technology Council Joint Roadmap for Trustworthy AI and Risk Management. Her ideas are set out in her book Data Ethics of Power. A Human Approach in the Big Data and AI Era (Edward Elgar Publishing).

Luca De Biase

Editorial Director

Journalist and writer, head of the innovation section at Il Sole 24 Ore. Professor of Knowledge Management at the University of Pisa. Recent books: Innovazione armonica, with Francesco Cicione (Rubbettino, 2020), Il lavoro del futuro (Codice, 2018), Come saremo, with Telmo Pievani (Codice, 2016), Homo pluralis (Codice, 2015). Member of the Mission Assembly for Climate-Neutral and Smart Cities at the European Commission. Co-founder of the ItaliaStartup Association. Member of the scientific committee of Symbola, Civica and Pearson Academy. Until January 2021 he chaired the "Working Group on the phenomenon of hate speech online", established by the Minister of Technological Innovation and Digitization together with the Ministry of Justice and the Department of Publishing at the Presidency of the Council. He designed and managed La Vita Nòva, a pioneering bi-monthly review for tablets, which won a Moebius Award in Lugano and a Lovie Award in London, both in 2011. His work was honored with the James W. Carey Award for Outstanding Media Ecology Journalism 2016 by the Media Ecology Association.