Trends

“Technologists cannot simply leave the social and ethical questions to other people, because the technology directly affects these matters.”

Tim Berners-Lee

A gallery of bold ideas from cultural leaders that help us understand the social meaning of AI.

The success of new artificial intelligences has reinvigorated the discussion of language as a synthesis of human experience. Today, this synthesis takes center stage because artificial intelligence learns by assimilating all recorded human knowledge and delivers it back to each person interacting with it in the form of a “hyperlanguage”: a fusion of expressive forms made possible by the ability to translate messages and meanings across all the world’s languages and formats, from texts to images, videos to software, and more.

Yet, because language synthesizes human experience and AI connects all languages, technology cannot be conceived apart from social dynamics. Any specialized design therefore loses its meaning unless it is part of a broader reflection on the complex socio-technical system in which humans coevolve with their machines.

These pages offer a brief overview of major thinkers and their contributions to understanding this crucial era. Their key lesson is that any development in the role of artificial intelligence will result from a deep interaction between social and technological dynamics.

Artificial intelligence demonstrates, above all, a new stage in humans’ ability to create tools, but this time humans have surpassed themselves. Despite not fully understanding how their biological brains function, humans have created a digital imitation of their workings so perfect, and still so improbable, that some observers suggest the creation may surpass its creator: a challenging paradox to unravel.

Listening to or reading these experts on the relationship between humans and their sophisticated machines leads to two conclusions:

- Humans will project many of their cognitive experiences onto thinking machines, initially revealing the ancient doubts they harbor about their own identities and seeking answers in the mirror of artificial intelligences, only to discover that they have renewed themselves.

- Artificial intelligences that think by mimicking the neural circuits of biological brains remain less efficient than their human counterparts, because they need more information and more energy to learn, yet they grow faster. And, if humans so desire, these machines will develop the capability to set their own goals, marking their coming of age.

The point is that the communities designing these machines must recognize that the machines’ goals must remain subordinate to human objectives.

This requires a rapid leap in quality in the design of the machines and the social organizations that adopt them. Through this transition, humans will further evolve, learning to perform actions they could not have achieved without the new machines, enhancing their traditional performances, and inventing new ways to innovate.

MUSTAFA SULEYMAN

Mustafa Suleyman is the CEO of Microsoft AI. He was previously co-founder of Inflection and of DeepMind. He brings 15 years of experience as an entrepreneur, technologist and leader in artificial intelligence. He is on the board of directors of The Economist and is a Senior Fellow at the Belfer Center for Science and International Affairs at the Harvard Kennedy School.

But Mustafa, what is an AI anyway?

One morning over breakfast, my six-year-old nephew Caspian was playing with Pi, the AI I created at my last company, Inflection. With a mouthful of scrambled eggs, he looked at me plain in the face and said, “But Mustafa, what is an AI anyway?” (…)

I think AI should best be understood as something like a new digital species. Now, don’t take this too literally, but I predict that we’ll come to see them as digital companions, new partners in the journeys of all our lives. (…)

They communicate in our languages. They see what we see. They consume unimaginably large amounts of information. They have memory. They have personality. They have creativity. They can even reason to some extent and formulate rudimentary plans. They can act autonomously if we allow them. And they do all this at levels of sophistication that is far beyond anything that we’ve ever known from a mere tool. And so saying AI is mainly about the math or the code is like saying we humans are mainly about carbon and water. It’s true, but it completely misses the point. (…)

Since the beginning of life on Earth, we’ve been evolving, changing and then creating everything around us in our human world today. And AI isn’t something outside of this story. In fact, it’s the very opposite. It’s the whole of everything that we have created, distilled down into something that we can all interact with and benefit from. (…)

Here’s what I’ll tell Caspian next time he asks. AI isn’t separate. AI isn’t even, in some senses, new. AI is us. It’s all of us.

Abstract

What Is an AI Anyway? TED Talk, May 2024

In his May 2024 TED Talk, Mustafa Suleyman explores the evolving concept and impact of artificial intelligence (AI). He begins with a story about his nephew, Caspian, who prompts the question, “What is an AI anyway?” This innocent inquiry leads Suleyman into a deep discussion about the transformative potential of AI technologies.

Suleyman predicts a future where AI becomes ubiquitous through cloud-based supercomputing, much as browsers emerged with the internet. He envisions AIs as conversational interfaces, each unique and endowed with immense knowledge, accuracy, and emotional intelligence. These AIs won’t just be tools; they will act as personal tutors, medical advisors, and strategic consultants, available at any moment.

A key concept introduced by Suleyman is the “actions quotient”, or AQ, which refers to an AI’s capability to perform tasks in both the digital and physical realms. He imagines a world where every entity, from individuals to governments, will have its own AI, and these AIs will become companions and partners deeply integrated into daily life. They will manage tasks from the mundane to the complex, aiding everything from local community events to global scientific advances.

However, Suleyman warns of the potential risks associated with such powerful technology. He advocates a thoughtful approach to AI development, suggesting that we should view AIs as a new type of digital species rather than mere tools. This perspective underscores the need to prioritize safety and human agency in managing the inevitable unintended consequences.

Concluding his talk, Suleyman reflects on the broader existential and philosophical implications of AI. He argues that AI is not an external force but a continuation of human evolution and creativity, encapsulating the entirety of human achievement and potential. He emphasizes that as we develop AI, we must ensure it reflects the best of humanity—our empathy, curiosity, and creativity.

Suleyman’s vision is both a caution and a beacon of hope, illustrating AI’s potential to mirror and amplify the best aspects of human nature, making it not only a technological revolution but a deeply human one as well.

“He emphasizes that as we develop AI, we must ensure it reflects the best of humanity—our empathy, curiosity, and creativity”.

Symbiotic Connections

Imminent’s Annual Report 2024

A journey through neuroscience, localization, technology, language, and research. An essential resource for leaders and a powerful tool for understanding more deeply the perceived trade-off between artificial intelligence and humans, and their respective roles in designing socio-technical systems.

GEOFFREY HINTON

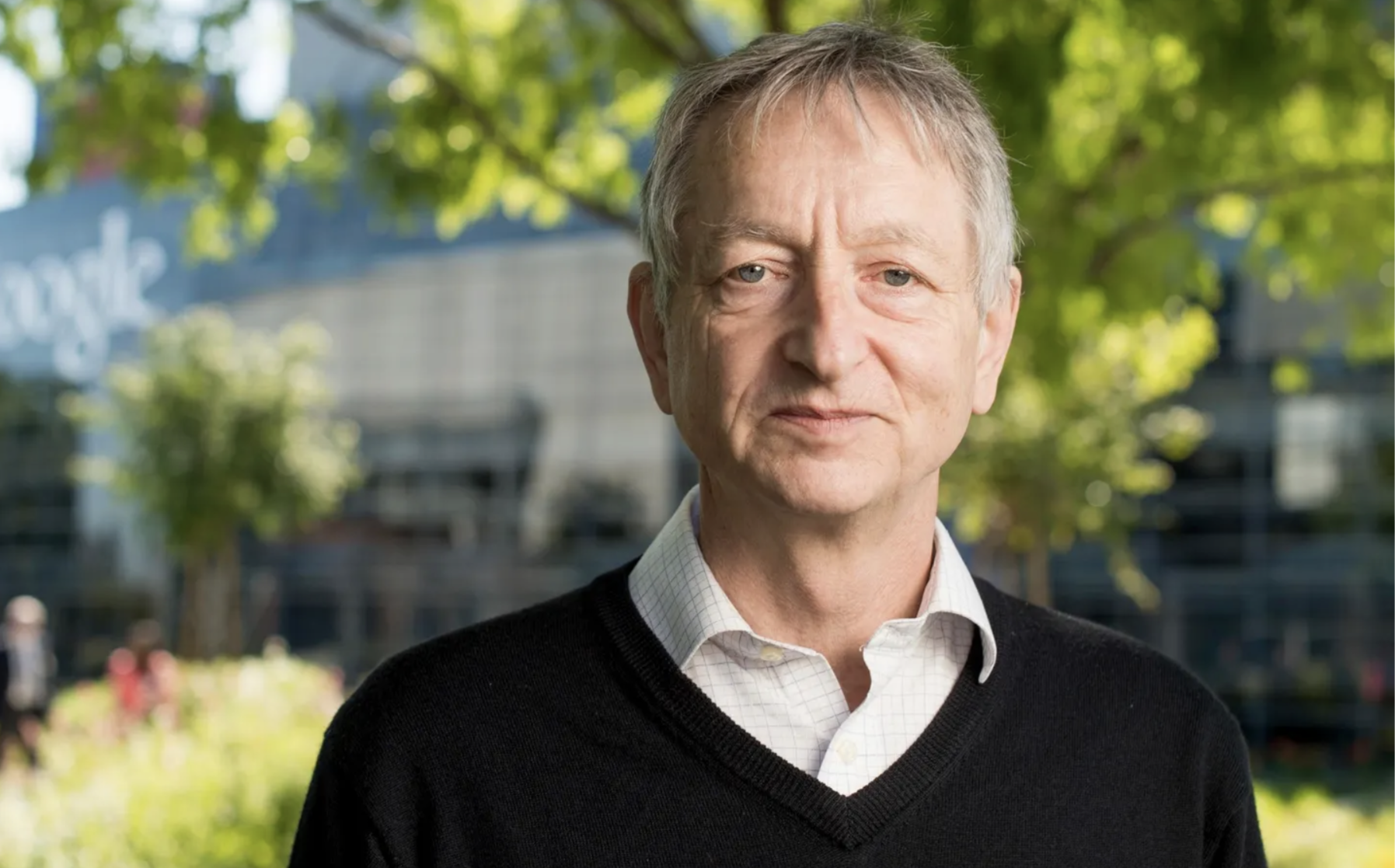

Geoffrey Everest Hinton is a British-Canadian computer scientist and cognitive psychologist, most noted for his work on artificial neural networks. From 2013 to 2023, he divided his time between Google and the University of Toronto. In 2017, he co-founded the Vector Institute in Toronto and became its chief scientific advisor. Hinton received the 2018 Turing Award, often referred to as the “Nobel Prize of Computing”, together with Yoshua Bengio and Yann LeCun, for their work on deep learning. The three are sometimes referred to as the “Godfathers of Deep Learning” and have continued to give public talks together.

Will digital intelligence replace biological intelligence?

So since the 1950s, there have been two paradigms for intelligence. The logic inspired approach thinks the essence of intelligence is reasoning, and that’s done by using symbolic rules to manipulate symbolic expressions. (…) The biologically inspired approach is very different. It thinks the essence of intelligence is learning the strengths of connections in a neural network. (…)

The idea that a big neural network with no innate knowledge could actually learn both the syntax and the semantics of language just by looking at data was regarded as completely crazy by statisticians and cognitive scientists. I had statisticians explain to me that a big model has 100 parameters. The idea of learning a million parameters is just stupid. Well, we’re doing a trillion now. (…)

The thing that probably worries me most is that if you want an intelligent agent that can get stuff done, you need to give it the ability to create subgoals. So if you want to go to the States, you have a subgoal of getting to the airport and you can focus on that subgoal and not worry about everything else for a while. So superintelligences will be much more effective if they’re allowed to create subgoals. And once they are allowed to do that, they’ll very quickly realize there’s an almost universal subgoal which helps with almost everything. Which is: get more control. (…)

Biological computation is great for evolving because it requires very little energy, but my conclusion is that digital computation is just better. And so I think it’s fairly clear that maybe in the next 20 years, I’d say with a probability of .5, in the next 20 years, it will get smarter than us and very probably in the next hundred years it will be much smarter than us. And so we need to think about how to deal with that. And there are very few examples of more intelligent things being controlled by less intelligent things. And one good example is a mother being controlled by a baby. Evolution has gone through a lot of work to make that happen so that the baby survives. It is very important for the baby to be able to control the mother.

Abstract

Romanes Lecture, Oxford, February 2024

This lecture provides a comprehensive overview of artificial intelligence (AI), focusing on neural networks, the evolution of machine learning, and potential threats posed by AI technologies. The speaker begins by differentiating between logic-inspired and biologically-inspired paradigms of intelligence, emphasizing the latter’s focus on learning through neural network connections.

Hinton explains the structure and function of neural networks, detailing how they learn to recognize objects through input, hidden, and output layers. He highlights how backpropagation, which computes how to adjust all connection strengths at once, is far more efficient than the naive “mutation method” of changing one weight at a time and keeping only the changes that help.
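The contrast between the “mutation method” and backpropagation can be made concrete with a toy example. This is a minimal sketch, not code from the lecture: the tiny network (two inputs, four tanh hidden units, one linear output), the squared-error loss, the sine-curve data, and every function name are assumptions chosen for illustration. The mutation learner tries one weight per step and keeps the change only if the error falls; the backpropagation learner gets a gradient for every weight from a single backward pass.

```python
import math
import random

# Hypothetical toy network: 2 inputs -> 4 tanh hidden units -> 1 linear output.
def forward(w1, w2, x):
    """Return hidden activations and the output for one input vector x."""
    h = [math.tanh(sum(wij * xj for wij, xj in zip(row, x))) for row in w1]
    y = sum(w2k * hk for w2k, hk in zip(w2, h))
    return h, y

def loss(w1, w2, data):
    """Mean squared error over (input, target) pairs."""
    return sum((forward(w1, w2, x)[1] - t) ** 2 for x, t in data) / len(data)

def mutation_step(w1, w2, data, step=0.05):
    """'Mutation' method: nudge ONE random weight, keep it only if the loss drops.
    Each trial needs fresh passes over the data yet tests only one weight."""
    before = loss(w1, w2, data)
    if random.random() < 0.5:
        i, j = random.randrange(len(w1)), random.randrange(len(w1[0]))
        old = w1[i][j]
        w1[i][j] += random.choice((-step, step))
        if loss(w1, w2, data) >= before:
            w1[i][j] = old  # no improvement: undo the change
    else:
        k = random.randrange(len(w2))
        old = w2[k]
        w2[k] += random.choice((-step, step))
        if loss(w1, w2, data) >= before:
            w2[k] = old  # no improvement: undo the change

def gradients(w1, w2, data):
    """Backpropagation: one backward pass yields dLoss/dw for EVERY weight."""
    g1 = [[0.0] * len(w1[0]) for _ in w1]
    g2 = [0.0] * len(w2)
    n = len(data)
    for x, t in data:
        h, y = forward(w1, w2, x)
        dy = 2 * (y - t) / n  # derivative of mean squared error w.r.t. the output
        for k in range(len(w2)):
            g2[k] += dy * h[k]
            dh = dy * w2[k] * (1 - h[k] ** 2)  # chain rule back through tanh
            for j in range(len(x)):
                g1[k][j] += dh * x[j]
    return g1, g2

def backprop_step(w1, w2, data, lr=0.05):
    """Move every weight a small step against its gradient."""
    g1, g2 = gradients(w1, w2, data)
    for k in range(len(w2)):
        w2[k] -= lr * g2[k]
        for j in range(len(w1[k])):
            w1[k][j] -= lr * g1[k][j]

def init(seed=1):
    """Identical random starting weights for both learners."""
    rng = random.Random(seed)
    return ([[rng.uniform(-1, 1) for _ in range(2)] for _ in range(4)],
            [rng.uniform(-1, 1) for _ in range(4)])

random.seed(0)
# Target: a sine curve; the second input is a constant 1.0 acting as a bias.
data = [([i / 10, 1.0], math.sin(i / 10)) for i in range(-20, 21)]
w1m, w2m = init()
w1b, w2b = init()
start = loss(w1m, w2m, data)
for _ in range(200):
    mutation_step(w1m, w2m, data)
    backprop_step(w1b, w2b, data)
print(f"start {start:.3f}  mutation {loss(w1m, w2m, data):.3f}  "
      f"backprop {loss(w1b, w2b, data):.3f}")
```

Both learners begin from the same weights. The mutation learner can only keep improvements, one weight at a time, while each backpropagation step adjusts all weights together; the exact numbers printed depend on the random seeds.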

The speaker also discusses the groundbreaking achievements of neural networks in image recognition, made possible by major advances from his students.

He addresses the skepticism of symbolic AI proponents about neural networks’ capabilities with language, emphasizing the successful application of large language models (LLMs) like GPT-4 in understanding and generating language based on vast data inputs and complex feature interactions.

Addressing potential AI risks, the lecture touches on issues like misinformation, job displacement, surveillance, and autonomous weaponry, highlighting the long-term existential risks AI might pose, including the potential for AI to gain autonomous control.

The talk concludes with insights into the computational advantages of digital over analog neural networks, suggesting that digital systems, despite their high energy consumption, offer superior knowledge sharing and learning capabilities.

The lecture thus prepares the audience for a future in which AI could surpass human intelligence, urging consideration of control and ethical implications as AI continues to evolve.

JENSEN HUANG

Jensen Huang founded NVIDIA in 1993 and has served as President, CEO, and a member of the Board of Directors since the company’s founding. In 2017, Fortune named him Businessperson of the Year. In 2019, the Harvard Business Review ranked him as the top CEO in the world.

AI Translates in a Hyperlanguage

What happened was we now have the ability to have software that can understand. We digitized everything. We digitized genes, but what does it mean? What does it mean that we digitized amino acids? What does it mean that we digitized words, we digitized sounds, and videos? But what does that mean?

We have the ability from a lot of data and from the patterns and relationships, we now understand what they mean, with the computer. Now that we understand what they mean, we can translate them. Because we learned about the meaning of these things in the same world, we didn’t learn about them separately: we learned about speech and words and paragraphs and vocabulary in the same context. And so we found a correlation between.

So now not only we understand the meaning of each modality, but we can understand how to translate between them: and so for obvious things you could caption video to text, text to video as MidJourney, text to text, ChatGPT. So we know that we understand meaning, and we can translate.

The translation of something is the generation of information. And you have to take a step back and ask yourself what is the implication in every single layer of everything that we do? (…)

What it means is that the way we process information, fundamentally, will be different in the future. The way we do video or build chips and systems will be different. The way we write software will be fundamentally different in the future.

Abstract

From an article about the speech Jensen Huang gave at the SIEPR Economic Summit, March 2024.

At the 2024 SIEPR Economic Summit, Nvidia CEO Jensen Huang delivered a compelling forecast about the future of artificial intelligence. Addressing a full house, Huang made striking predictions about AI’s capabilities, suggesting that within the next five years AI will surpass human performance in all forms of testing, including advanced medical licensing exams. That would be a significant leap from today’s AI, which can already pass legal bar exams.

Looking further ahead, Huang envisions that a decade from now AI’s computational power will be a million times greater than it is today. These future systems will not only generate and learn from synthetic data but will also be capable of sustained critical thinking and imagination, transforming how they tackle complex, long-term problems.

This marks a substantial evolution from the current functionalities of models like ChatGPT.

During a keynote Q&A with John Shoven, emeritus senior fellow at SIEPR and Charles R. Schwab Professor of Economics at Stanford, Huang discussed the profound changes in human-AI interaction that these advancements could bring. However, he also addressed the uncertainties surrounding the definition and achievement of human-like intelligence in AI, emphasizing the need for a clear consensus on what constitutes true artificial general intelligence.

Known for his unique sense of humor, Huang also shared personal anecdotes and advice at the summit. He encouraged resilience and preparedness to face setbacks, jokingly wishing “ample doses of pain and suffering” for Stanford students aspiring to entrepreneurship, highlighting that overcoming adversity is key to achieving greatness.

ETHAN MOLLICK

Ethan Mollick is an Associate Professor of Management at the Wharton School of the University of Pennsylvania, where he studies and teaches innovation and entrepreneurship and examines the effects of artificial intelligence on work and education. In addition to his research and teaching, Ethan also leads Wharton Interactive, an effort to democratize education using games, simulations, and AI. Ethan’s latest book is Co-Intelligence: Living and Working with AI. He is also the author of a popular blog, One Useful Thing, which has more than 134,000 followers.

Co-Intelligence: Living and Working with AI

As alien as AIs are, they’re also deeply human. They are trained on our cultural history, and reinforcement learning from humans aligns them to our goals.

They carry our biases and are created out of a complex mix of idealism, entrepreneurial spirit, and, yes, exploitation of the work and labor of others. In many ways, the magic of AIs is that they can convince us, even knowing better, that we are in some way talking to another mind. And just like our own minds, we cannot fully explain the complexity of how LLMs operate.

There is a sense of poetic irony in the fact that as we move toward a future characterized by greater technological sophistication, we find ourselves contemplating deeply human questions about identity, purpose, and connection. To that extent, AI is a mirror, reflecting back at us our best and worst qualities. We are going to decide on its implications, and those choices will shape what AI actually does for, and to, humanity.

Abstract

From a blog post on how Mollick used AI in writing his book

The author’s blog post provides insightful answers to the intriguing questions raised about the integration of AI in the writing process and its broader implications:

- How can AI enhance the creative process of writing a book?

AI enhances the creative process by acting as a tool that assists with specific tasks rather than taking over the writing itself. The author uses AI to generate alternatives, refine prose, and overcome creative blocks, thus augmenting the creative flow rather than replacing the human element.

- What are the practical applications of AI in interacting with literature?

The practical applications of AI in interacting with literature, as demonstrated by the author, include tools that allow readers to engage with the book’s content on a deeper level. AI tools developed by the author help readers analyze, apply, and interact with the text, enriching the reading experience through greater interactivity and accessibility.

- How does one effectively integrate human and AI capabilities in a work process?

Human and AI capabilities are integrated effectively through a balanced approach that the author calls the ‘Centaur’ and ‘Cyborg’ work styles. In the ‘Centaur’ approach, tasks are strategically divided between human and AI according to their respective strengths. In the ‘Cyborg’ approach, by contrast, the relationship is more intertwined, with tasks flowing back and forth between human and AI to enhance efficiency and creativity.

- Can AI truly replicate or surpass the nuanced capabilities of human writing?

While AI has advanced significantly and can mimic certain aspects of human writing, the author believes that it does not yet replicate or surpass the nuanced capabilities of skilled human writers. AI can assist greatly but still falls short of the top tiers of creative writing, which require a deep understanding of context, nuance, and emotional depth.

- What is the future of authorship in an AI-augmented world?

According to the author, authorship is likely to continue to involve significant human input, especially in creative fields. Although AI’s capabilities are advancing rapidly and its role in the writing process will grow, creativity, nuanced expression, and personal touch in authorship are expected to remain predominantly human endeavors. AI will augment and assist, but not fully replace, the creative instincts of human authors.

These answers reflect a nuanced understanding of AI’s role as a tool that complements human creativity rather than acting as a replacement, pointing towards a future where AI and humans collaborate more deeply across various professional and creative domains.

NAOMI S. BARON

Naomi S. Baron is a linguist and professor emerita of linguistics in the Department of World Languages and Cultures at American University in Washington, D.C. Baron’s current research is on artificial intelligence and writing. Her newest book is Who Wrote This? How AI and the Lure of Efficiency Threaten Human Writing (Stanford University Press, 2023).

How AI and the Lure of Efficiency Threaten Human Writing

Since Turing’s time, a persistent question has been how much we’re looking for intelligent machines to replace humans and how much we seek collaboration. Today, economists like Erik Brynjolfsson urge us to distinguish between automation (replacement) and augmentation (collaboration). Brynjolfsson calls the automation model “the Turing Trap.”

He argues that the goal in AI shouldn’t be building machines that match human intelligence (passing all manner of Turing tests) but programs giving human efforts a boost. (…)

Think about the ways humans might team up with AI. The purpose might be for AI to assist humans in a task. Turn the tables, and human effort could be funneled into improving the way the AI does its job. A third option: human-AI co-creation. (…)

Human-computer interaction. Human-centered AI. Humans in the loop. Does it matter what we call human-machine collaboration? Linguists aren’t the only ones caring about semantics. So do politicians and writers. “Incursion” and “assault” both accurately label an invasion, but the connotations are starkly different.

The same goes for deciding to talk about “humans in the loop” or “AI in the loop.” (…)

We talked about fears that AI might outsmart us and take over control. The new tack is to insist that humans remain at the helm, to have AI serve us and not vice versa. I’m reminded of those tripods Homer describes in the Iliad, scuttling about as they deliver food to the deities. Today’s AI servants are infinitely smarter, but the goal is to keep them subservient. Partners, maybe, but junior partners.

Abstract

Who Wrote This? How AI and the Lure of Efficiency Threaten Human Writing – Stanford University Press 2023

The text discusses the symbiotic relationship between humans and artificial intelligence (AI), exploring various applications, historical perspectives, and future potential. Its main themes are summarized below:

- AI in Creative Writing and Journalism:

AI, particularly tools like GPT-3, is used to assist in creative writing and journalism, enhancing creativity and productivity. These tools help generate content, overcome writer’s block, and even act as co-authors, enabling a collaborative writing process that can yield results beyond individual human capability.

- Human-Centered AI and Ethics:

The discussion emphasizes a human-centered approach to AI, advocating for “humans in the loop” to ensure that AI supports rather than replaces human efforts. This perspective is critical to maintaining control over AI and ensuring that it enhances human capabilities without creating ethical dilemmas.

- AI in Mental Health:

AI applications in mental health, such as therapeutic chatbots that provide cognitive behavioral therapy, show how AI can effectively support mental health treatment. These tools offer accessibility and consistency in delivering therapeutic advice, often proving as effective as human therapists in certain contexts.

- Historical Context of Automation Fears:

The text reflects on historical fears of automation, such as those that arose with the invention of the printing press, to contextualize modern concerns about AI replacing human jobs. This historical perspective supports the argument that, like previous technological advances, AI should be integrated in ways that complement rather than replace human roles.

- AI in Coding and Chess:

AI’s role extends to technical fields like coding, where it helps optimize and streamline processes, and to games like chess, where AI has both competed against human players and worked cooperatively with them to improve game strategies.

- AI Augmentation vs. Replacement:

The text introduces the concept of the “Turing Trap”, discussing the potential pitfalls of AI fully replacing human roles. It argues for AI as an augmentation tool that enhances human efforts, particularly in fields requiring creative and cognitive skills.

- Collaborative AI Applications:

Examples of AI collaboration include improvisational theater, where AI suggests lines to actors, and programming, where platforms like GitHub and OpenAI’s Codex facilitate coding projects. These examples illustrate AI’s role as a partner in creative and technical endeavors.

Photo Credit: Martin Martz – Unsplash

Luca De Biase

Editorial Director

Journalist and writer, head of the innovation section at Il Sole 24 Ore. Professor of Knowledge Management at the University of Pisa. Recent books: Innovazione armonica, with Francesco Cicione (Rubbettino, 2020), Il lavoro del futuro (Codice, 2018), Come saremo, with Telmo Pievani (Codice, 2016), Homo pluralis (Codice, 2015). Member of the Mission Assembly for Climate-Neutral and Smart Cities at the European Commission. Co-founder of the ItaliaStartup Association. Member of the scientific committees of Symbola, Civica and Pearson Academy. Until January 2021 he chaired the "Working Group on the phenomenon of hate speech online", established by the Minister of Technological Innovation and Digitization together with the Ministry of Justice and the Department of Publishing at the Presidency of the Council. He designed and managed La Vita Nòva, a pioneering bi-monthly review for tablets, which won a Moebius Award (Lugano, 2011) and a Lovie Award (London, 2011). His work was honored with the James W. Carey Award for Outstanding Media Ecology Journalism 2016 by the Media Ecology Association.