Localization

Historical evidence – Indic computing before Unicode and OpenType

Before the Internet arrived, all reading used to happen through printed books, magazines or newspapers. Publishers often used different styles of text and established their own identity. When the world of publishing adopted computers, the Desktop Publishing industry continued to develop a variety of fonts and text styles. The number of designers who wanted to develop these styles and calligraphy grew in every region and language across the country. However, this was “before” the OpenType standard existed.

The technology and evolution of OpenType was supposed to help existing needs by making processes easier and more efficient. Instead, Unicode and OpenType made them excruciating. Most publishers resisted having online versions because it compromised their style and identity due to technical limitations.

Computers are not ancient devices, and the use of Indian languages on computers was not a prehistoric attempt that required reinvention with Unicode or OpenType. The Indian publishing industry, government offices, PSUs and several large enterprises that introduced computers into their operations early needed to create communications and publications in large quantities in Indian languages, even before Microsoft had developed its first version of Windows. What did they use? Did they face the same difficulties?

oXXIgen

Imminent Research Report 2022

GET INSPIRED with articles, research reports and country insights – created by our multicultural interdisciplinary community of experts with the common desire to look to the future.

Get your copy nowIndian language computing celebrates its 50th anniversary in 2020. The first integrated Devanagari computer was developed in 1983. The Indian script encoding standard ISCII (Indian Script Code for Information Interchange) along with the standard keyboard layout INSCRIPT were officially released by the BIS (Bureau of Indian Standards) in 1988. This standard was produced by a group of scientists at IIT Kanpur who studied all the major Indo-Aryan scripts, their behavior and the computing requirements and developed the standard over a period of one and a half decades. The ISCII standard document released by the BIS was not simply a list of characters. It covered every aspect of the script’s properties and outlined the principles and rules that had to govern the script’s behaviors in computing in order for its implementation to be unambiguous, intuitive and efficient. A very pertinent analogy to this is the parallel encoding standard for English, ASCII (American Standard Code for Information Interchange), released by the ANSI (American National Standards Institute). This was first published in 1963 and was heavily revised in 1970 after 7 years of in-depth study. 7 years of investigation to decide the encoding of a linear script that had 26 letters in lower and upper cases, 10 digits, punctuation, some mathematical symbols and the American currency symbol, all totaling 96 characters. It took that long because it was important to decide the chronology. Should the sequence of digits precede or follow the letters? Where should the punctuation go? Where should the symbols go? All of this so that when the numeral values are sorted, the result is a natural sequence intuitive to users. While maintaining all of these, the special positioning of the lower and upper case characters – a property specific to the Latin script – had to be considered. The system is designed in such a way that the lower and upper cases can be equaled by masking just one fixed “bit”. When Unicode incorporated English, ASCII was adopted “as is” and none of the advantages of ASCII were affected.

Indian scripts are different from Latin scripts and have very different properties. Equally in-depth study and reflection was needed to develop an efficient encoding and principles that would last.

Several Indian companies developed software following the ISCII standard and the principles outlined. A font standard (although not officially released by the Bureau), the ISFOC (Indian Script Font Code), was also widely adopted in this software. The ISFOC defined a finite set of glyphs (192) and a fixed layout (arrangement) that could be followed by font designers and software developers alike to create compatible fonts and display engines. This made it very simple and straightforward, and many font styles were developed by numerous designers for each language in various parts of the country.

The governments, enterprises, PSUs and the publishing industry used these solutions. Computers were expensive, but many small publishing businesses took out loans to set up Desktop Publishing centers. These centers would compose, print and publish anything from small business journals or promotional leaflets to theses or sizable volumes. Some people who could afford computers at home used this software to create literature. The software didn’t pose any such issues as highlighted above, and the text created was completely noise-free.

In fact, at a release event in Odisha where Microsoft unveiled the Odia spell checker support in MS Office about a decade ago, the spell checker identified a misspelled three-letter word but offered 36 suggestions, with the most appropriate and obvious one appearing somewhere in the last quarter of the list. At the same meeting, an astute Indian software dealer demonstrated a word processing software called “Apex Language Processor” that ran in a 16-bit environment on DOS, running with only a few hundred kilobytes of memory, supporting a full 10 million inflected vocabulary terms for spell checking the Odia language (among several other languages including English) and offering the most accurate and appropriate suggestions. The dealer threw the software open to the experts to evaluate and compare.

Odia, as a language, had comparatively low commercial potential, but was nonetheless implemented to these advanced levels 30 years ago.

Why did this happen? What are the limitations? How were Indian languages used on computers before Unicode and OpenType? What can we do now?

We have been endeavoring to persuade policymakers and our ecosystem partners to introduce India-led changes in the Unicode standard, as this is clearly hampering India’s linguistic landscape.

We have tried to capture the major challenges arising from the three standards that are followed to enable the use of Indian languages on digital devices:

- Unicode – the encoding of characters for various scripts.

- OpenType – the font format that contains the glyphs (shapes) and the rules that apply for the selection and placement of glyphs to display characters and combinations.

- INSCRIPT – the keyboard layout.

If the above standards are implemented properly, a system will enable a user to type, view and edit text.

Since all the major operating systems implement these standards for Indian languages in full detail, to explain what is amiss, let us elaborate with an example.

oXXIgen

Imminent Research Report 2022

GET INSPIRED with articles, research reports and country insights – created by our multicultural interdisciplinary community of experts with the common desire to look to the future.

Get your copy nowEditing issues

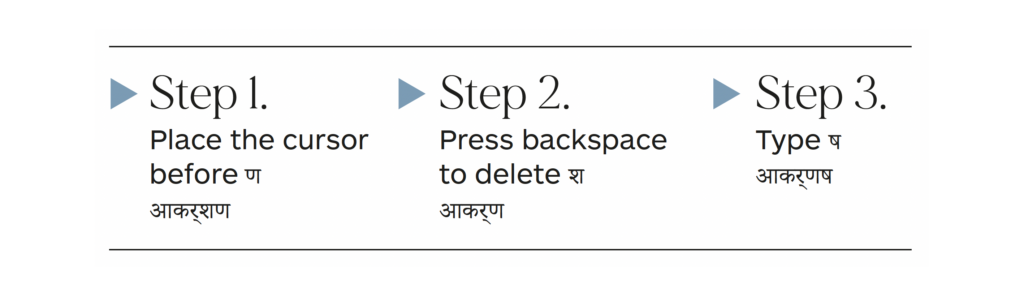

While typing, if a user needs to correct the word आकर्शण by replacing the श with ष, how does one do it on different devices or operating systems (OS) such as Windows, Apple iOS, Android, etc.? While this is a simple example of editing, all the fundamental uses of computing such as sorting, searching, indentation or the development of advanced computing such as NLP services (machine translation, speech to text, text to speech, text analysis algorithms, etc.) for Indian languages pose significant issues.

For example, here is a step-by-step attempt on Windows 10:

Restrictions on font styles

If a student or professional wants to use specific font styles for their website, blog or document, none of the Indian languages offers even a handful of styles on any platform. They cannot even seek the services of a good designer to create or offer different font styles.

Causation

Unicode and OpenType were first introduced about two decades ago. But over 500 million Indian users who have access to digital mediums struggle with the basics of using their languages while the world speeds past them in English and various other languages. OpenType was developed jointly by Microsoft and Adobe.

Interestingly, to this day, most PDF creators or readers are able to do complete justice to the Indian language format. This makes some of the most widely used document formats absolutely unusable for anything other than reading.

Unicode and OpenType have not been progressive or advanced. These standards had a different agenda, which they continue to serve. Unicode was formed as a consortium of American companies that wanted to create a universal character encoding for all world scripts so that software and hardware could be developed with multilingual environments for different regions of the world. This objective of Unicode also meant that it had a corollary goal of replacing all other character encoding schemes across the world. The American companies wanted to be compatible with each other for different regions, but in isolation.

Unicode

The first version of Unicode released in 1991 listed characters used as standards elsewhere in the world. This included all the Indian scripts encoded in ISCII. Unicode acknowledged that these were based on the ISCII 1988 standard released by the BIS. However, since the consortium’s decisions for all scripts were made by a handful of people who may not have been native speakers of the languages they were considering or who may not have had sufficient knowledge, research or understanding, a series of blunders were committed:

- Researchers in India, focused on the standards and all aspects of computing for Indian languages, were working on and released a revision of ISCII in 1991 (the same year Unicode was released), but they were never consulted by the Unicode consortium, nor did Unicode ever consider revisions of ISCII in subsequent versions of Unicode.

- Unicode adopted ISCII erroneously in many ways. The universal encoding that was listed in a representative column, which only “looked” like Devanagari for ease of understanding of the ISCII standard document, was adopted as the “actual” Devanagari character set.

- The Assamese script was merged into Bangla without making any effort to understand why the two were different scripts. Even with the parallel of Devanagari, which is used for seven different official languages in India and yet is maintained as “one” script in ISCII, the fact that Assamese and Bangla were listed as two scripts was overlooked.

- Special characters, their use and the limitations outlined were ignored or misinterpreted, and erroneous parallels were introduced in Unicode.

- All aspects of Indian language properties, rules and principles to be adopted to understand the characters and implement them in computing were omitted from the standards document. Indian languages are not used as a list of characters. And picking “only” the list of characters without the other details was as good as chopping the limbs off the torso of the character encoding standard.

- A lack of definitions and ambiguity with character behavior led to the creation of noisy text that apparently looked similar to clean text. This has filled India’s linguistic digital data with a lot of noise, making it inefficient to use for all computing needs such as sorting, searching, and all NLP.

English maintained every thoughtful consideration incorporated into ASCII when adopted into Unicode. But Indian scripts lost it all, and for decades, there has been a constant struggle to try and debate in committees, conferences and forums, with proposals to improve an aspect here and there for each script separately, inching forward but never reaching the goal.

Also, with the openly stated objective to encode every script, Unicode has offered a lofty honor to researchers who have taken pride in finding obscure or archaic characters to introduce into each script, proudly turning their proposals into an international standard. This false pride makes the consortium’s entire operation an academic farce in the face of the practical needs of today, where for decades students and users have been losing the chance to use their languages properly. The fact is that Unicode is adopted for its popular reach. But it isn’t a standard. It is a consortium decision that has no agenda for different languages or their speakers and users.

OpenType

OpenType is a font format for scalable fonts based on the TrueType structure. OpenType was defined jointly by Microsoft and Adobe and is the most popular format used in Windows, Linux and Android operating systems. For more than a decade, Unicode was not even adopted by the consortium’s founding members. Though there is no recorded reason, the eventual implementation of Unicode support for Indian scripts through OpenType made it evident that such an approach wouldn’t have been possible to implement in the computers of the 1990s, which had low memory and processing abilities compared to those that came later. In fact, no such font format has been developed for any fixed size or bitmap fonts. However, this apart, OpenType introduced a long list of insurmountable issues. Here are some of the major ones:

- Since the OpenType format was designed for use with Unicode text and Unicode omitted the properties and characteristics of the characters encoded, OpenType failed to unambiguously define the rules to be applied. That made the design of fonts in different languages extremely complex and still unreliable.

- As a font, OpenType is a collection of shapes (glyphs). The classification and rules apply over the glyphs. On the other hand, Indian languages classify the behavior of characters (script grammar). This limitation makes it impossible to implement the appropriate text rendering behavior through the font alone.

- While OpenType allows users to write rules and then generalize them, it is impossible to constrain any behavior that is undesired. There is no possibility to handle anything that is beyond the rules, and this cannot be generalized.

- The sequence of character combinations is unlimited, making it impossible for the font developers to generate and validate all the combinations. In fact, the initial release of OpenType required font makers to create all fallback combinations. Even after decades of revisions of the OpenType format, a font for any Indian script is designed with over 500 glyphs compared to around 190 in other font formats. Such a nightmarish experience of designing OpenType fonts made almost all font designers quit designing. And the handful of font makers who were bold enough to attempt it never offered any guarantee as to the fonts’ behavior across all platforms. The fonts are also at least 20 times more expensive than any other font format. The cost, unreliability and complexity made it prohibitive for the industry to consider font options altogether.

- Designing and rendering OpenType fonts requires software that is very expensive in terms of resources. They need advanced hardware and a lot of memory. Microsoft, one of the co-authors of the OpenType font format, developed its own Uniscribe library to render OpenType fonts on Windows operating systems. However, Microsoft could not port the same library to its mobile operating system, and Windows-based cell phones never supported any Indian languages. Even today, software that needs real-time rendering does not support OpenType. For example, the most popular game development library, “Unity”, does not support OpenType. Games in Indian languages cannot be developed using Unity.

Photo credits: Himansu Srivastav, Unsplash